Elastic: the open source Elastic Stack. Reliably and securely take data from any source, in any format, and search, analyze, and visualize it in real time.

Elasticsearch

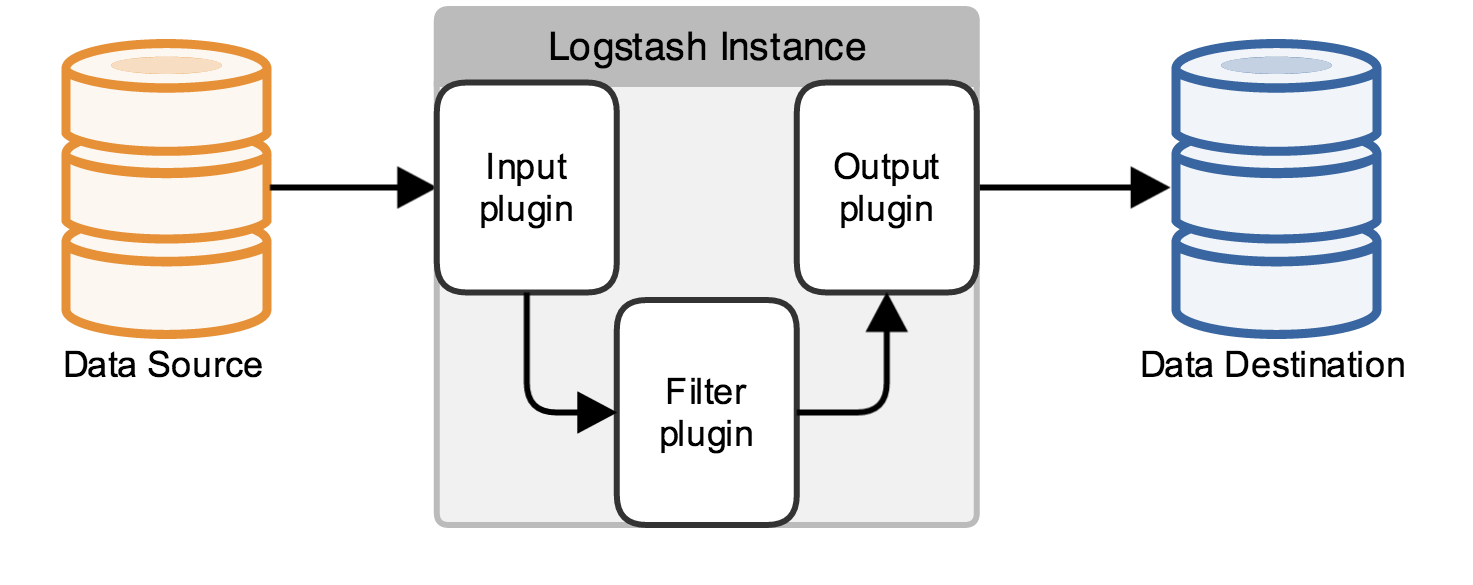

Logstash

help

plugins

input

log4j input plugin

The log4j input plugin receives log events (serialized org.apache.log4j.spi.LoggingEvent objects) over TCP. It is deprecated; use Filebeat instead.

logstash.conf(log4j plugin):
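
The original configuration for this step is not available here. As a minimal sketch, a pipeline using the log4j input might look like the following. It assumes the plugin's `mode`, `host`, and `port` options; the values shown are illustrative, and because the plugin is deprecated, its availability depends on the installed Logstash version.

```
input {
  log4j {
    mode => "server"    # listen for connections from a log4j SocketAppender
    host => "0.0.0.0"   # assumed bind address
    port => 4560        # assumed port; must match the appender's port
  }
}

output {
  # Print each event to the console for inspection.
  stdout { codec => rubydebug }
}
```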

log4j.properties

filter

grok

Grok parses arbitrary text and structures it. It works by combining text patterns into something that matches your logs.

The syntax for a grok pattern is %{SYNTAX:SEMANTIC:DATA_TYPE}. SYNTAX is the name of the pattern that matches the text (for example, one of the shipped patterns), SEMANTIC is the identifier, or field name, given to the matched text, and DATA_TYPE is the optional type to which the captured string is converted.
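
To make the three parts concrete, here is a small sketch that uses two patterns shipped with Logstash, NUMBER and IP. The field names `duration` and `client` are chosen for illustration only.

```
filter {
  grok {
    # %{NUMBER:duration:float} -> SYNTAX=NUMBER, SEMANTIC=duration, DATA_TYPE=float
    # %{IP:client}             -> SYNTAX=IP, SEMANTIC=client (stays a string)
    match => { "message" => "%{NUMBER:duration:float} %{IP:client}" }
  }
}
```

Given an input line such as `0.043 55.3.244.1`, this would be expected to produce a numeric `duration` field and a string `client` field.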

custom pattern

- (?<field_name>pattern): a named capture group written directly in the match expression.

- patterns_dir: create a patterns directory under the Logstash root directory, then create a file in it, for example custom-pattern. Each line of the file has the form <pattern_name> <pattern>, for example:

      STR [0-9A-F]{1,3}
      STR6 [0-9A-F]{1,6}

  Configuration:

      grok {
        patterns_dir => ["./patterns"]
        match => { "message" => "%{STR:duration} %{STR:client}" }
      }

  Result:

      123 456
      {
            "duration" => "123",
          "@timestamp" => 2017-11-09T01:46:06.025Z,
            "@version" => "1",
                "host" => "angi-deepin",
              "client" => "456",
             "message" => "123 456",
                "type" => "stdin"
      }

  Patterns defined this way can be reused by other grok filters.

- pattern_definitions: the value is a hash whose keys are pattern names and whose values are the patterns.

  Configuration:

      grok {
        pattern_definitions => { "STR9" => "[0-9A-F]{1,9}" }
        match => { "message" => "%{STR9:duration} %{STR9:client}" }
      }

  Result:

      123456789 987654321
      {
            "duration" => "123456789",
          "@timestamp" => 2017-11-09T01:56:41.810Z,
            "@version" => "1",
                "host" => "angi-deepin",
              "client" => "987654321",
             "message" => "123456789 987654321",
                "type" => "stdin"
      }

  Patterns defined this way are available only to the current grok filter.
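
For the inline (?<field_name>pattern) form listed first, the original configuration is not available here. A minimal sketch, reusing the `duration` and `client` field names from the other examples as an assumption, could look like this:

```
filter {
  grok {
    # Inline named captures: no pattern file or pattern_definitions is needed.
    # Each (?<name>regex) stores the text matched by regex in the field "name".
    match => { "message" => "(?<duration>[0-9A-F]{1,3}) (?<client>[0-9A-F]{1,3})" }
  }
}
```

This form suits a one-off expression. When the same expression appears in several places, a named pattern is easier to maintain.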

geoip

fingerprint

mutate

date

match

output

Beats

Filebeat

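Filebeat is a lightweight log shipper. When you face logs generated by hundreds, thousands, or even tens of thousands of servers, virtual machines, and containers, say goodbye to SSH. Filebeat gives you a lightweight way to forward and centralize logs and files, keeping simple things simple.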

filebeat.yml

logstash.conf(filebeat):
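
The original configuration for this step is not available here. As a minimal sketch, a Logstash pipeline that receives events from Filebeat typically uses the beats input. The port 5044 and the Elasticsearch address `localhost:9200` below are assumptions; adjust them to match filebeat.yml and your cluster.

```
input {
  beats {
    port => 5044    # assumed port; must match the Logstash output configured in filebeat.yml
  }
}

output {
  elasticsearch {
    hosts => ["localhost:9200"]    # assumed local Elasticsearch node
  }
}
```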

Metricbeat

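Metricbeat is a lightweight metrics shipper that collects metrics from systems and services. From CPU to memory, from Redis to Nginx, Metricbeat ships a wide range of system and service statistics in a lightweight way.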

Packetbeat

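Packetbeat is a lightweight network data shipper that digs into the data travelling over the wire to show what your applications are doing. It is a lightweight network packet analyzer that can send data to Logstash or Elasticsearch.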

Winlogbeat

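Winlogbeat is a lightweight Windows event log shipper for keeping a close watch on events across Windows-based infrastructure. It streams Windows event logs to Elasticsearch and Logstash in real time, in a lightweight way.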

Heartbeat

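Heartbeat is a lightweight uptime monitoring shipper that checks service availability through active probing. Given a list of URLs, Heartbeat asks one simple question: are you alive? It ships the answers, along with response times, to the rest of the Elastic Stack for further analysis.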

Kibana

config/kibana.yml
